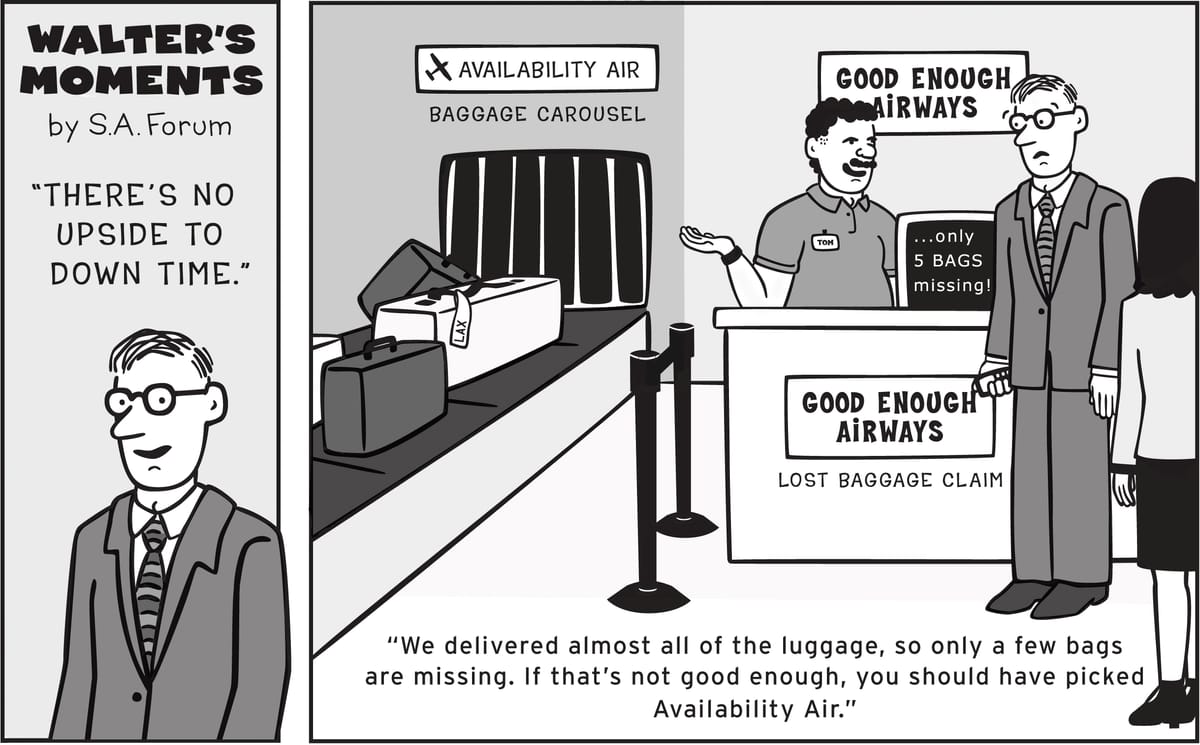

Building a Highly Available Kubernetes Cluster – Part 1: The Incident

This post is part of a series on transforming my home Kubernetes cluster into a Highly Available (HA) setup.

In my last blog post, I documented an incident with my cluster and wrote a post-mortem report to make sure it doesn’t happen again. This post is about acting on what that post-mortem uncovered.

Lessons learned

When my only master node failed, my cluster was dead in the water. Worker nodes were still operational, but without an API server, they were practically useless—I couldn't schedule new workloads or manage existing ones. It was a frustrating reminder of how vulnerable a single control plane setup can be.

Let’s revisit something I mentioned in a previous post:

By default, K3s uses SQLite instead of etcd for its datastore. While SQLite is lightweight and simpler to manage, it lacks the distributed and highly available properties of etcd. In our current setup, the single control plane node means:

- If the control plane crashes, the Kubernetes API becomes unavailable.

- Worker nodes remain operational but cannot process updates or new workloads.

- The cluster is effectively down until the control plane is restored.

And that’s exactly what happened. So yeah, I kinda predicted the future. :)

Why HA Matters

If my hardware limited me to a single master node, I’d just live with it and try to survive. But having a single point of failure in my setup makes me itchy. Plus, I really want a reliable Kubernetes cluster at home—not just for peace of mind but to experiment and progress in my career as an SRE.

A Highly Available (HA) setup would solve this issue by:

- Ensuring the cluster remains functional even if one control plane node fails.

- Allowing me to perform updates without downtime (taking down one node at a time).

- Making my setup more resilient and closer to production-grade clusters.

This was the final push I needed. It was time to upgrade to HA.

The Plan

So, the council (me) decided: we need an HA setup. That means adding two more machines, bringing the total to three control plane nodes.
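Why three and not two? Here is a minimal sketch of the majority arithmetic, assuming the HA datastore is a Raft-based store such as etcd (the function names are mine, purely for illustration):

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with `members` voting nodes."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 2, 3):
    print(f"{n} node(s): quorum={quorum(n)}, tolerated failures={fault_tolerance(n)}")
# 1 node(s): quorum=1, tolerated failures=0
# 2 node(s): quorum=2, tolerated failures=0
# 3 node(s): quorum=2, tolerated failures=1
```

Under that assumption, a second control plane node alone buys no extra tolerance, which is why the plan jumps straight from one to three.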

Hardware Acquisitions

I picked up a ThinkCentre with an 8th-gen i7 and 16GB RAM. And, thanks to some lucky negotiations, my father-in-law "sponsored" me with an old workstation collecting dust in his house.

With these two additions, my plan is:

- Install Proxmox on the ThinkCentre to:

  - Create a TrueNAS VM for persistent storage.

  - Create a new VM running Ubuntu Server to serve as a control plane node.

- Use the workstation, on bare metal, as another control plane node.

I’m also planning to deploy Democratic CSI so persistent volumes aren’t tied to local storage anymore.

Migration Checklist

Before I make any modifications, I need to back up some PVCs, particularly my V Rising server, where my friends and I have spent hours. Then I need to clean up Cloudflare records and tunnels to ensure a smooth transfer and avoid existing-records errors when migrating to the new cluster.

Action Items

- [ ] Back up PVCs.

- [ ] Decommission applications and Cloudflare config.

- [ ] Prepare new nodes.

- [ ] Reinstall the OS on the existing nodes and control plane for a clean setup.

- [ ] Deploy HA cluster via Ansible.

- [ ] Restore cluster state.

- [ ] Test the HA configuration.
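For the last item, here is a rough sketch of what a first HA drill could look like: shut down one control plane node, then check that a majority of API endpoints still accept connections. The hostnames `cp-1` to `cp-3` are hypothetical, the helper names are mine, and a plain TCP check on port 6443 only shows that something is listening, not that the API server is healthy.

```python
import socket
from typing import Callable, Iterable, List

API_PORT = 6443  # default Kubernetes/K3s API server port


def tcp_probe(host: str, port: int = API_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and DNS failures
        return False


def reachable_nodes(hosts: Iterable[str],
                    probe: Callable[[str], bool] = tcp_probe) -> List[str]:
    """Return the hosts whose API port answers the probe, in input order."""
    return [h for h in hosts if probe(h)]


def majority_reachable(hosts: Iterable[str],
                       probe: Callable[[str], bool] = tcp_probe) -> bool:
    """True if more than half of the control plane nodes answer the probe."""
    hosts = list(hosts)
    return len(reachable_nodes(hosts, probe)) >= len(hosts) // 2 + 1


# Simulated drill with a fake probe, so nothing touches the network here.
control_planes = ["cp-1", "cp-2", "cp-3"]  # hypothetical hostnames
powered_off = {"cp-1"}


def fake_probe(host: str) -> bool:
    return host not in powered_off


print(reachable_nodes(control_planes, fake_probe))     # ['cp-2', 'cp-3']
print(majority_reachable(control_planes, fake_probe))  # True
```

Against a real cluster, drop the `fake_probe` argument so the default `tcp_probe` is used, and follow up with an actual `kubectl` command to confirm the API is serving requests and not merely listening.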

Next up in this series: in Part 2, I'll dig deeper into the hardware setup, networking concerns, and initial cluster configuration for HA. Stay tuned! 🚀