Learning from Failure: Why You Should Write Post-Mortems for Your Homelab

A Rough Homecoming

I returned home from a trip a week ago. Between unpacking and catching up on household chores, I hadn’t found time to work on my cluster. Unfortunately, sometime during that week, a power outage shut down all my machines unexpectedly. When I powered them back on, I quickly realized something was wrong: I couldn’t reach my master node through the Kubernetes API.

Digging deeper, I found that my master node was experiencing a kernel panic during boot. At that moment, I had no clue what had gone wrong, so I attempted to boot from a previous kernel version. Luckily, that worked, and I regained access. However, another issue surfaced—I couldn't update my system.

Before my trip, I had been installing GPU drivers on my master node to enable hardware acceleration for Jellyfin. In the process, I misconfigured some drivers, breaking package dependencies. This left my system in a partially broken state where updates couldn't be applied. I suspect that a kernel update was attempted but failed, and since the machine hadn’t been rebooted until the power outage, the issue only became apparent once it came back online.

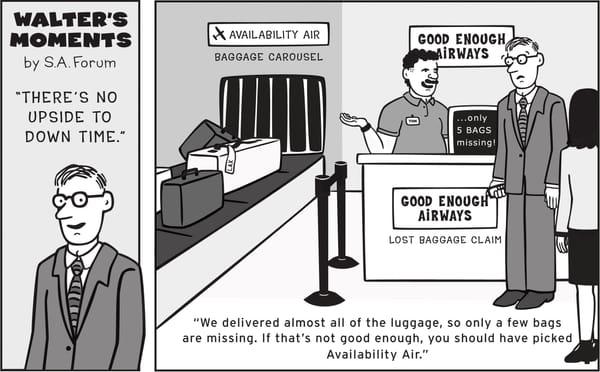

Dealing with this was tedious, but it reminded me of something important: every failure is an opportunity to learn. This incident is a good occasion to discuss post-mortem reports and why they are a critical tool, not just in enterprise settings but also in homelabs.

What the Hell is a Post-Mortem?

As Site Reliability Engineers (SREs), we are constantly deploying changes and improvements to complex, distributed systems. With this velocity of change, incidents and outages are inevitable. When something breaks, we troubleshoot, identify the root cause, fix it, and restore services. But what happens afterward? Should we just move on?

Ignoring incidents and their root causes is a recipe for repeated failures. If issues aren’t documented and shared, they can resurface in different ways, potentially leading to larger system failures. Instead, we should take the opposite approach:

- Create a written record of the incident,

- Analyze its impact,

- Document the actions taken to fix it,

- Identify the root cause(s),

- Outline follow-up steps to prevent recurrence.

A post-mortem isn’t just a bureaucratic process—it’s a cultural practice. It’s not about assigning blame but about learning, iterating, and continuously improving our systems. Reliable infrastructure isn’t just about great engineering—it’s about evolving through failure.

How to Write a Solid Post-Mortem

There’s no universal standard for writing post-mortems, as each organization has its own format. However, according to the Google SRE Workbook [1], a good post-mortem should follow a structured format to ensure all critical aspects of an incident are captured. Here’s a typical structure:

1. General Information: Incident title, date, owner(s), affected teams, and the report status (draft/final).

2. Executive Summary: A high-level overview of what happened, its impact, the root cause, and the resolution.

3. Problem Summary: The duration of the incident, affected systems, user impact, and potential financial consequences.

4. Detection: How the issue was discovered (monitoring, alerts, user reports) and how long it took to identify the problem.

5. Resolution: The steps taken to mitigate and fully resolve the issue, including the time to recovery.

6. Root Cause Analysis: A deep dive into what went wrong, including any triggering events and underlying systemic failures.

7. Timeline / Recovery Efforts: A chronological breakdown of key events, actions taken, and decisions made during the incident response.

8. Lessons Learned: What went well, what didn’t, and any lucky breaks that helped mitigate the impact.

9. Action Items: Follow-up tasks to prevent recurrence, improve monitoring, and strengthen system resilience.

10. Additional Documentation (Optional): Logs, charts, relevant incident reports, and a glossary of technical terms.
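To make the structure above easier to reuse, here is a minimal Python sketch that prints an empty post-mortem skeleton with the ten sections and pre-fills the two timing figures mentioned under Detection and Resolution. The function names (`format_duration`, `render_skeleton`), the plain-text layout, and the example timestamps are my own assumptions for illustration; they are not part of the Google SRE Workbook format.

```python
from datetime import datetime, timedelta

# Section names follow the ten-part structure listed above.
SECTIONS = [
    "General Information",
    "Executive Summary",
    "Problem Summary",
    "Detection",
    "Resolution",
    "Root Cause Analysis",
    "Timeline / Recovery Efforts",
    "Lessons Learned",
    "Action Items",
    "Additional Documentation (Optional)",
]


def format_duration(delta: timedelta) -> str:
    """Render a timedelta as whole hours and minutes, e.g. '2h 05m'."""
    total_minutes = int(delta.total_seconds() // 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours}h {minutes:02d}m"


def render_skeleton(
    title: str, started: datetime, detected: datetime, resolved: datetime
) -> str:
    """Return a plain-text post-mortem skeleton with timing fields filled in."""
    lines = [f"Post-Mortem: {title}", "Status: draft", ""]
    for number, name in enumerate(SECTIONS, start=1):
        lines.append(f"{number}. {name}")
        if name == "Detection":
            # Time between the start of the incident and noticing it.
            lines.append(f"   Time to detect: {format_duration(detected - started)}")
        elif name == "Resolution":
            # Time between the start of the incident and full recovery.
            lines.append(f"   Time to recovery: {format_duration(resolved - started)}")
        else:
            lines.append("   TODO")
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical timestamps, invented purely for this example.
    print(
        render_skeleton(
            "Example incident",
            started=datetime(2025, 1, 1, 8, 0),
            detected=datetime(2025, 1, 1, 9, 30),
            resolved=datetime(2025, 1, 1, 12, 0),
        )
    )
```

Saving the output to a file at the start of an incident gives you a draft to fill in while the details are still fresh. For a homelab, sections such as affected teams or financial consequences can simply be left short or marked as not applicable.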

The Blame Game: Avoiding the Witch Hunt Mentality

A good post-mortem is never about pointing fingers. It's easy to get frustrated when human errors cause outages, but mistakes will happen. The real question is:

- How did our system allow this failure to occur?

- How can we make it more resilient in the future?

Rather than focusing on individual blame, a post-mortem should identify gaps in system design, processes, or safeguards that allowed the failure to happen. Every incident is a learning opportunity—one that can drive real improvements in infrastructure, monitoring, and automation.

This is the essence of continuous improvement. By fostering a blame-free culture, we encourage transparency, accountability, and collaboration. The strongest systems aren’t the ones that never fail—they are the ones that fail safely and recover quickly.

Post-Mortem for This Incident

I’ve written a full post-mortem for my GPU driver incident, and I’ll continue documenting every major event in my cluster. This will not only help me track my problem-solving process but also let me share insights that others can apply to their own homelabs and careers.

You can check out the full post-mortem here:

➡️ GPU Driver Incident

Final Thoughts

Failures will happen—whether you’re running a homelab or managing large-scale cloud infrastructure. But what separates good engineers from great engineers is how they learn from failures. Writing post-mortems not only strengthens your systems but also makes you a better problem-solver.

So the next time something breaks in your homelab, don’t just fix it and move on. Write it down. Learn from it. Improve. 🚀

✉️ Over to You:

- Have you ever experienced a frustrating incident in your homelab?

- Do you document your failures, or do you just move on?

- What’s your biggest takeaway from your worst outage?

Drop a comment below—I’d love to hear your thoughts! 👇

References

[1] Google Site Reliability Engineering Team, "Postmortem Culture," Google SRE Workbook. Available: https://sre.google/workbook/postmortem-culture/ (accessed Mar. 12, 2025).